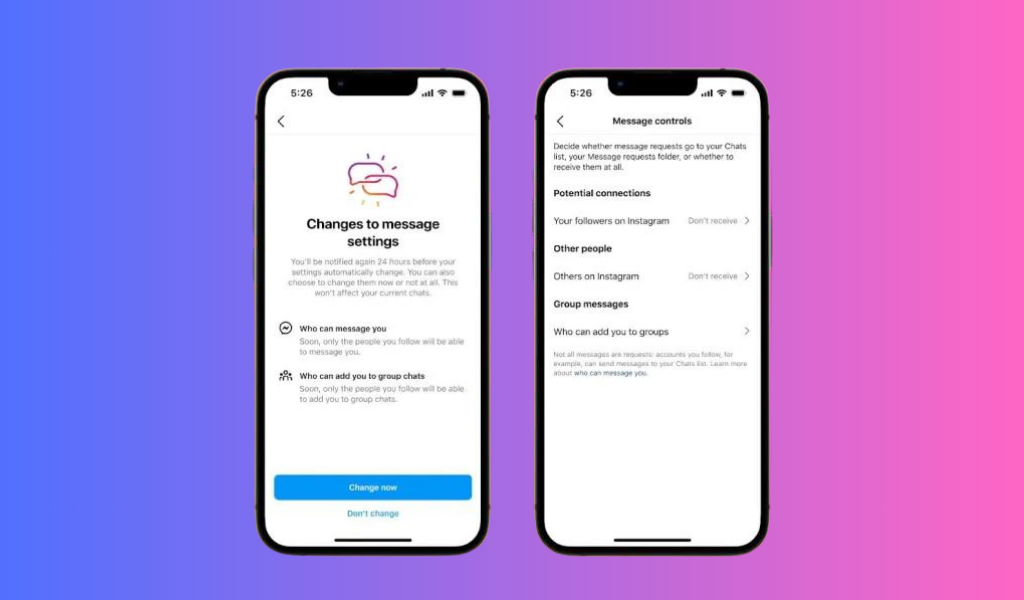

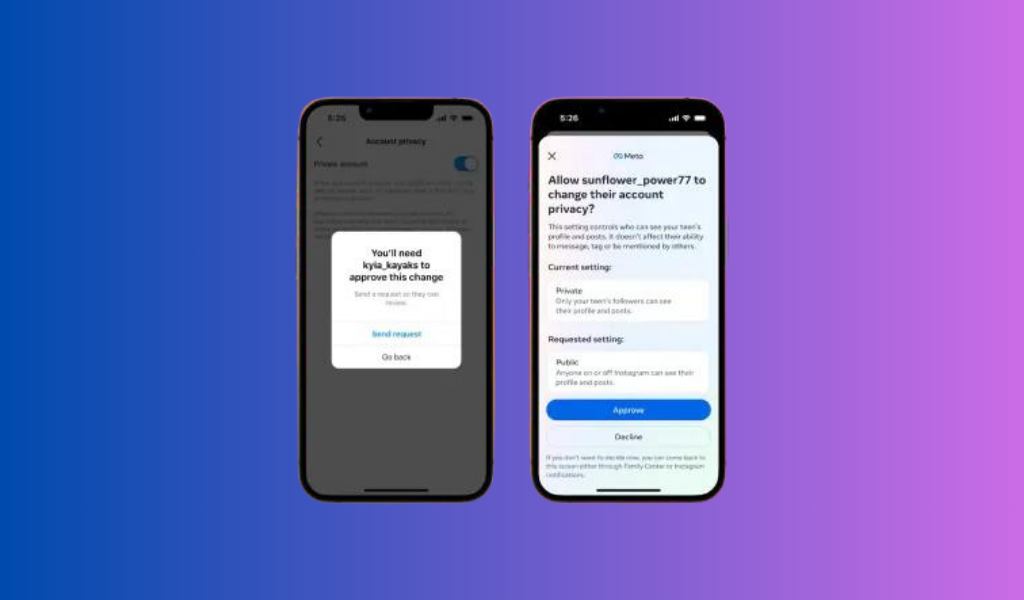

Meta’s latest updates to teen messaging controls and parental controls show the company prioritizing the safety and well-being of young users on Facebook and Instagram. By tightening restrictions on unsolicited messages and giving guardians more control over teens' privacy settings, Meta is taking proactive steps to protect teens from potential risks and inappropriate content.

These efforts, together with the company’s work to prevent self-harm and eating disorders and to combat child sexual abuse material, reflect Meta’s ongoing push to create a safer online space for all users, especially younger ones. Going forward, social media platforms must keep evolving and adopting measures that put their users' mental health and safety first, and Meta’s initiatives offer a positive example of how to do so.